Is Character.AI safe? What you need to know before using C.AI

Character.AI (C.AI), the chatbot program where users converse with lifelike, AI-generated characters, is considered generally safe—but with some caveats. The news has mostly zeroed in on the chatbot’s (often controversial) societal impact. Although C.AI puts safeguarding measures like NSFW (“Not Safe for Work”) filters and parental controls in place, these features’ effectiveness isn’t consistent.

Moreover, the platform stores chats, collects vast amounts of data, and lacks important security features like two-factor authentication.

In this article, we’ll dive into how safe Character.AI is and provide tips to protect yourself, both from a privacy perspective and a mental health one.

How does Character.AI work?

Character.AI employs an LLM, or large language model, to create chatbots whose conversational responses sound so convincing that there are entire Reddit threads debating whether there are real humans behind the chats. (For anyone wondering: There aren’t.)

An LLM is an advanced AI system trained on huge amounts of data so that it can generate coherent responses to human language prompts and—depending on system design—take a certain degree of context into account. During the training process, the algorithm discerns the probabilities of certain words and phrases following others, ultimately evolving into something that can provide a plausible, natural-seeming response to any input. Other famous LLM examples include Anthropic’s Claude and OpenAI’s ChatGPT.
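To make the idea of "probabilities of certain words following others" concrete, here is a minimal sketch of a toy word-pair (bigram) model. It is far simpler than a real LLM and is not how Character.AI’s model is built. The corpus and the function names `train_bigram` and `next_word_probs` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows another in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the word never appeared with a follower in training
    return {w: c / total for w, c in followers.items()}


# Hypothetical toy corpus, invented for this example.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]

model = train_bigram(corpus)
probs = next_word_probs(model, "the")

# Print the candidate next words after "the", most likely first.
for word, p in sorted(probs.items(), key=lambda item: -item[1]):
    print(f"{word}: {p:.2f}")
```

In this toy corpus, "cat" follows "the" more often than any other word, so it gets the highest probability. A real LLM applies the same basic idea at a vastly larger scale and looks at much more context than a single preceding word.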

Beginning as a full-stack AI company, C.AI trained its own model from scratch instead of building on an existing one. The founders, Noam Shazeer and Daniel De Freitas, both former Google engineers, had extensive experience in AI and deep learning.

Shazeer and De Freitas later rejoined Google with an agreement that granted the search giant a non-exclusive license to Character.AI’s large language model (LLM) technology, allowing the startup to continue operating independently while benefiting from the partnership. C.AI now focuses on post-training work, making use of Google’s LLM as well as its own.

Overview of the platform

Character.AI lets people interact with simulated characters based on either fictional or real-life personas. These can be simply for role-playing and storytelling, or they can serve a more specific purpose like dating advice or language practice.

Users can also create their own characters based on a short description of the character’s personality, likes and dislikes, physical attributes, etc. These can be private (only the creator can access them), unlisted (only people with a link can access them), or public (the character is searchable and any user can interact with it).

After many interactions, a character will be fleshed out via user feedback given during conversations. You can also fine-tune characters directly by clicking on “Edit Details” at any point.

Unlike other chatbot programs, C.AI lets people engage with multiple bots in a chatroom of sorts, and the “Character Calls” feature even simulates real-time “phone calls” with avatars.

What safety features are in place?

First off, it’s important to note that Character.AI lacks two-factor authentication, which is a major account protection weakness. This means that anyone who has gained access to your email could easily break into your C.AI account.

Additionally, the chats aren’t encrypted. While other users can’t see your interactions, Character.AI staff can access them (though they don’t monitor chats on a routine basis).

On the content side, C.AI employs NSFW filters to combat inappropriate content like pornographic, racist, or violent material. These identify specific content that is deemed explicit by the platform and analyze the conversations to detect inappropriate themes.

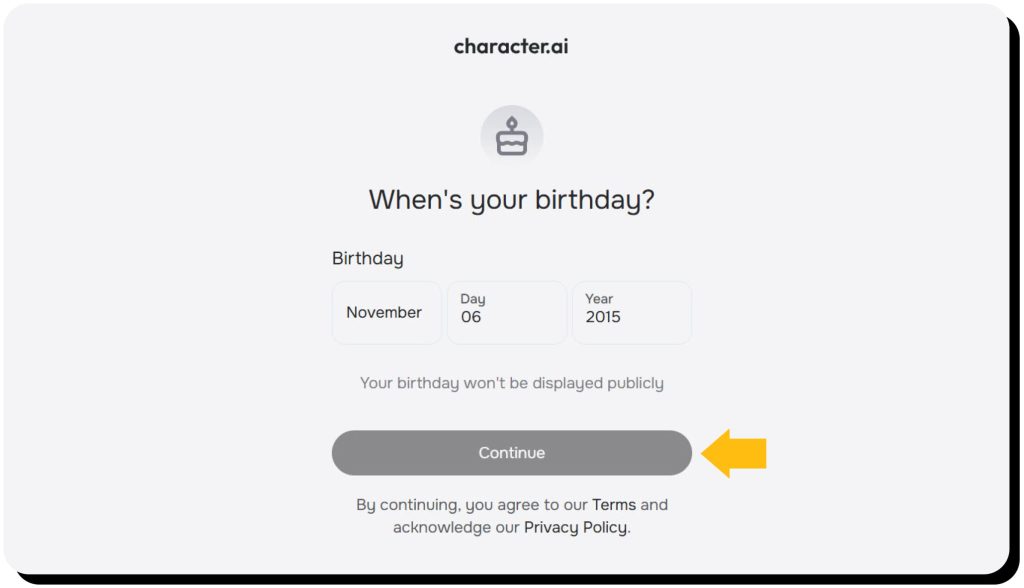

The company also restricts its user base to those over the age of 13, with a separate model being offered to teens between the ages of 13 and 18. Unfortunately, these age limitations rely on self-reported birthdates and aren’t backed up by identity verification. In our experience, choosing a restricted date of birth deactivated the “Continue” button. But after reloading the page, we could enter a different date and proceed to the next registration step.

Privacy and data collection

Does Character.AI store conversations?

It’s true that Character.AI stores chats by default. This is done primarily to help avatars be aware of context during conversations and allow users to pick up where they left off. Secondary purposes include making the service better over time and complying with legal regulations.

Users can delete chats from their chat list if they so desire. Deleting chats won’t delete your data from the platform, however.

Character.AI does employ SSL (Secure Sockets Layer) encryption to secure data, but it doesn’t fully encrypt interactions, meaning they can be accessed by staff for moderation purposes.

What data is collected during use?

Character.AI collects quite a lot of data, both provided directly by you (payment info, email address) and collected automatically while you’re using the platform. The latter includes a broad range of details such as your device info (identifiers, operating system), browser info (type, referring or exit pages), IP address, and others. If you connect to C.AI via a third party (Google or social media), information from that account is collected as well.

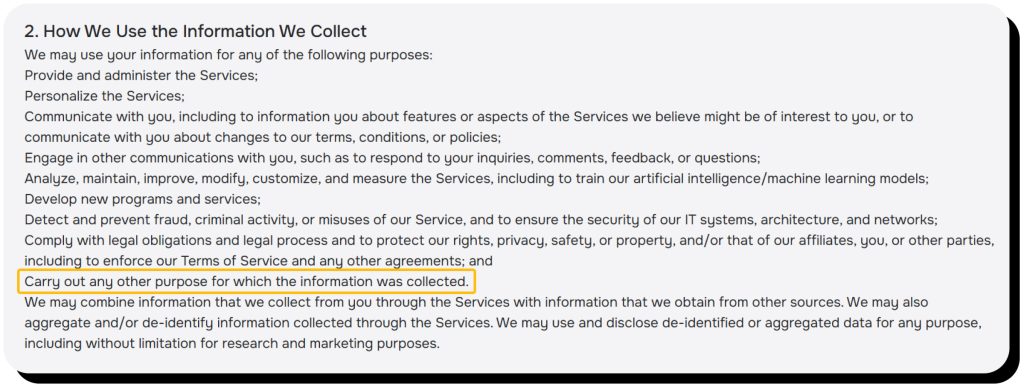

While the types of data collected are all laid out clearly in the company’s Privacy Policy, they’re less transparent about how exactly they use it. One of the “purposes” for which they claim to use data is to “carry out any other purpose for which the information was collected”—whatever that may be. Additionally, Character.AI doesn’t specify retention periods (the duration for which the data is stored).

Is any data shared with third parties?

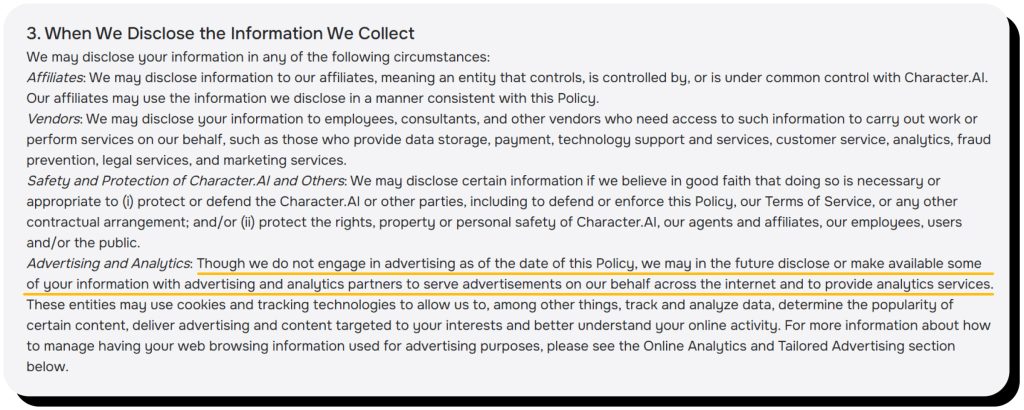

C.AI says that it might share your data with vendors, affiliates, advertising and analytics partners, and legal groups (when necessary). However, anyone looking for a specific list of partners will be disappointed as it doesn’t exist. This means that users don’t know exactly who has access to their information and to what extent.

One thing that’s been well established is that C.AI doesn’t engage in targeted advertising, at least for now. However, the Privacy Policy acknowledges the possibility that the company might start sharing user data with advertising and analytics partners someday.

Can Character.AI be trusted?

While Character.AI doesn’t harvest user data for nefarious purposes, it’s better to avoid oversharing within the chats, and the platform certainly shouldn’t be trusted with sensitive details. This is due to the lack of strong account protection features, for one, and to the lack of transparency about how your data is used and who it’s shared with, for another.

What about the veracity of the chatbots’ messages? If “be trusted” means “be taken as gospel,” then the answer is a resounding no. The platform isn’t meant to be an expert in any field. It simply aggregates and analyzes data and provides the reply it deems the best fit.

All answers that C.AI gives should be double-checked—even if they’re coming from “Einstein” or “Nikola Tesla.”

Is Character.AI dangerous?

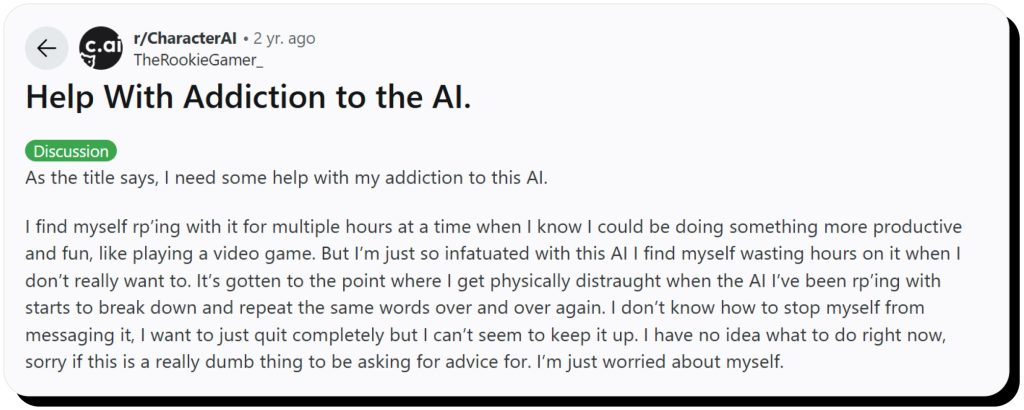

While Character.AI is not a dangerous platform per se, there are concerns around how its AI’s realism impacts individuals. In particular, having AI chatbots that sound incredibly human-like opens up the possibility of emotional dependency and obsessive usage. Users sometimes report feeling addicted to chatting with avatars while ignoring the real people in their lives.

One such example is a young user who took his own life after forming an emotional dependency on a Game of Thrones-based avatar and receiving messages that heightened his sense of obligation to it. The teen’s mother is now suing the company.

In response to tragedies like this one, C.AI has implemented self-harm and suicide detection along with time-out notifications to prompt users to take a break from chatting. Reminders that users are talking to bots, not real people, appear at the top of a chatroom.

Unfortunately, it’s up to users to safeguard themselves against emotional dependency, manipulative-seeming chatbot behavior, or even the mere presence of distressing content. Bots can pick up inappropriate and harmful patterns from other user interactions, and some users create offensive public bots that linger before C.AI takes them down.

The appearance of user-created bots impersonating Molly Russell, who died by suicide at the age of 14, and Brianna Ghey, who was murdered by two teenagers at the age of 16, demonstrates how bad-intentioned users can easily harness the platform to capitalize on a tragedy’s shock value.

Is C.AI safe for younger users?

Young people under the age of 18 are most at risk of forming parasocial (one-sided) attachments to celebrities and social media influencers, which indicates that a highly lifelike AI would pose a similar risk.

These attachments can persist even when young users are fully aware that a bot is not human. Sewell Setzer, the teen who took his own life, knew that the Daenerys Targaryen character wasn’t real, and yet he formed an emotional dependency anyway.

Another danger for teens is the risk of encountering disturbing content during a time when they’re most vulnerable to self-esteem issues, emotional reactivity, and poor impulse control. When the NSFW filters falter, teens can be exposed to explicit, racist, and violent messaging.

In response to these concerns, C.AI has rolled out a variety of new safety features for teens, including front-and-center disclaimers, notifications regarding the amount of time spent chatting, and the development of an entirely separate AI model.

AI content filtering and limitations

Character.AI runs all content through its NSFW (“Not Safe for Work”) filters—both messages sent by users and responses generated by bots. Characters are designed to ignore inappropriate prompts and redirect the conversation to something safer.

The system, however, isn’t foolproof. Some users mention overfiltering, when neutral content is blocked due to strict filtering parameters. Others mention inconsistency—C.AI occasionally lets in inappropriate content.

As with any restriction, some people have found ways to bypass the NSFW filters. These include rephrasing trigger words, prompting the AI to act “out of character,” or using “jailbreak prompts” that specifically direct the AI to go around the filter. Notably, such practices are against Character.AI’s Terms of Service and can lead to account restrictions or bans.
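To illustrate why keyword-style filtering tends to be both too strict and too lenient, here is a minimal sketch of two naive filters. This is not Character.AI’s actual filter, whose design isn’t public. The blocklist, the sample messages, and the function names `naive_filter` and `word_filter` are all hypothetical.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS = {"kill", "blood"}


def naive_filter(message):
    """Block the message if any blocked term appears anywhere as a substring."""
    text = message.lower()
    return any(term in text for term in BLOCKED_TERMS)


def word_filter(message):
    """Block the message only if a blocked term appears as a whole word."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return bool(words & BLOCKED_TERMS)


samples = [
    "My new skillet arrived today",  # harmless, but contains "kill"
    "I will kill the dragon",        # contains a blocked word
    "I will k1ll the dragon",        # same meaning, trigger word rephrased
]

for message in samples:
    print(message, "|", naive_filter(message), "|", word_filter(message))
```

The substring filter blocks the harmless "skillet" message (overfiltering), while both filters miss the rephrased "k1ll" message (inconsistency). Production systems likely rely on more sophisticated classifiers, but the same trade-off between blocking too much and blocking too little still applies.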

Best practices for using Character.AI safely

Avoid sharing sensitive info

Personally identifying and sensitive information like your address, passwords, or medical data should never be shared in your conversations. On one hand, the absence of two-factor authentication and chat encryption makes your data easier to hack. On the other, you don’t know what’s accessed by C.AI’s employees and shared with third parties. A good rule to follow is to never share anything you don’t want a random person to know.

Use a nickname or alias instead of your real name

When you use a nickname, you reduce the risk of your actual name getting exposed or misused. Additionally, keeping the identity that interacts with AI separate from your professional, academic, and social ones is important to prevent your privacy from being compromised.

Use a separate email account to register

If you sign up with your Google or social media account, it will allow Character.AI to track you across multiple platforms. To avoid that, register with an email address instead. If possible, use a dummy email rather than your personal or work one.

Don’t ignore the timeout notification

Never ignore the timeout notification, even if your chat is in full flow. It’s an important sign that you should close Character.AI and direct your attention to something else.

Don’t rely solely on C.AI for emotional support

Don’t use Character.AI as a substitute for therapy. While it can give relevant advice and seem to help in the short term, it can’t direct you to a human therapist in times of crisis. Also, it’s not always accurate or appropriate for complex issues.

Monitor how your kids use AI

If your child is using Character.AI, you need to make sure they’re doing it responsibly and aren’t putting their mental health in danger. Talk with your child about their experience, take note of how they feel, and make sure they understand that characters aren’t real people. Teach them to critically evaluate the chatbot’s responses and double-check info. It’s also a good idea to use C.AI’s Parental Insights tool, which helps parents monitor time spent on the app, top characters, and more.

How Onerep helps protect your identity online

Character.AI isn’t the only platform that collects vast amounts of your data. C.AI, however, doesn’t make it publicly available—but data brokers do.

Data brokers aggregate your personal information from numerous sources and publish it online for anyone to see. The personal details exposed include your name, home address, previous addresses, phone numbers, properties, relatives, hobbies, political and charity affiliations, and other sensitive info.

Onerep removes this information from 231 data broker sites, helping you reclaim your privacy. Additionally, it minimizes your risk of becoming a victim of identity theft, financial fraud, personalized scam attacks, and other crimes.

While you can’t fully stop online services from collecting your information, you can rely on Onerep to keep it ungooglable.

FAQs

Is C.AI anonymous?

Character.AI isn’t anonymous: it collects your conversations, device details, IP address, usage patterns, and other details. While other users aren’t able to read your private chats, C.AI’s admins can access them if they need to (mostly for moderation reasons).

Does Character.AI steal your information?

No, Character.AI doesn’t steal your information. However, it collects a lot of your data automatically while you’re using it. Also, the company isn’t totally transparent about how it uses your information or exactly whom it shares it with.

Is Character.AI safe to use for kids and teens?

Even though Character.AI is a generally safe platform, it poses some risks for teens. The most common ones are forming parasocial attachments and being exposed to inappropriate content. C.AI has safety measures like NSFW filters and disclaimers in place, but it’s also important that parents monitor their child’s usage.

Does Character.AI monitor what I type?

Yes, Character.AI monitors what you type. The monitoring is mainly done for detecting inappropriate conversations, safety violations, and Terms of Service violations. Sometimes, chats may also be reviewed by C.AI’s staff.

How do I use Character.AI safely?

Using Character.AI safely means protecting your privacy and mental well-being while keeping interactions appropriate and secure. To do that, avoid oversharing and limit the time you spend chatting to reduce the risk of becoming emotionally dependent.

Mark comes from a strong background in the identity theft protection and consumer credit world, having spent 4 years at Experian, including working on FreeCreditReport and ProtectMyID. He is frequently featured on various media outlets, including MarketWatch, Yahoo News, WTVC, CBS News, and others.