Is ChatGPT safe to use? What to know about data leakage, prompt injection, and more

ChatGPT is safe for general use, as long as you avoid inputting sensitive personal info, and you have a basic understanding of generative AI’s limitations. Keep in mind that it isn’t a truth-telling device and it isn’t “conscious.”

The chatbot burst onto the scene in late 2022, reaching a million users before a week went by. The numbers didn’t stop there, and between summer 2023 and summer 2025 its user base doubled as people saw how it could brainstorm ideas, summarize content, write code, and even hash out mental health issues.

AI optimism and panic surged simultaneously, making ChatGPT more of a historical moment than a simple software program. For this reason, the question “Is ChatGPT safe?” is extra weighty—encompassing not only privacy and cybersecurity issues, but also its impact on society as a whole.

Is ChatGPT legit?

ChatGPT is a legitimate program created by OpenAI, a legitimate company. The chatbot app has even been integrated into Microsoft 365 as Microsoft Copilot, and there’s an enterprise version that had more than 3 million business users in June 2025.

OpenAI started as a nonprofit in 2015 with the goal of building “artificial general intelligence,” or AGI, that would be “safe and beneficial” to humanity. AGI is defined as an AI model that can do human-level work on most economically salient tasks.

The company soon realized that the costs of developing AGI would outpace those available through its nonprofit structure, and in 2019 it added a “capped profit” subsidiary while also beginning its strategic partnership with Microsoft. This structure allowed OpenAI to raise billions in funding, but by mid-2025 the company announced plans to further restructure into a Public Benefit Corporation (PBC) to sustain its long-term research and commercialization goals.

How does ChatGPT work?

ChatGPT is a large language model (LLM), which is a program that’s been trained on gargantuan amounts of text-based data (including blog posts, news articles, Reddit conversations, and even Python code snippets) to recognize patterns in language. The model can then create plausible responses to text-based prompts based on these learned patterns.

You can think of ChatGPT as being an extra-powerful version of autocomplete: a program that will consider up to several paragraphs of input as its context and spit out several more paragraphs of coherent text that adheres to patterns gleaned from the entire internet.

What ChatGPT doesn’t do is consult any sort of “database of truth” before serving up information. When it says that the capital of Japan is Tokyo, that’s because it’s ingested countless sentences referring to Japan’s capital as Tokyo—not because it’s gone to a database of capital cities and consulted the entry for Japan.

This is why anyone asking “Is it safe to use ChatGPT” for things where the truth really matters—say, legal research—will have to understand that they’re getting the most “likely” response according to ChatGPT, and not necessarily the correct response.

Is ChatGPT secure?

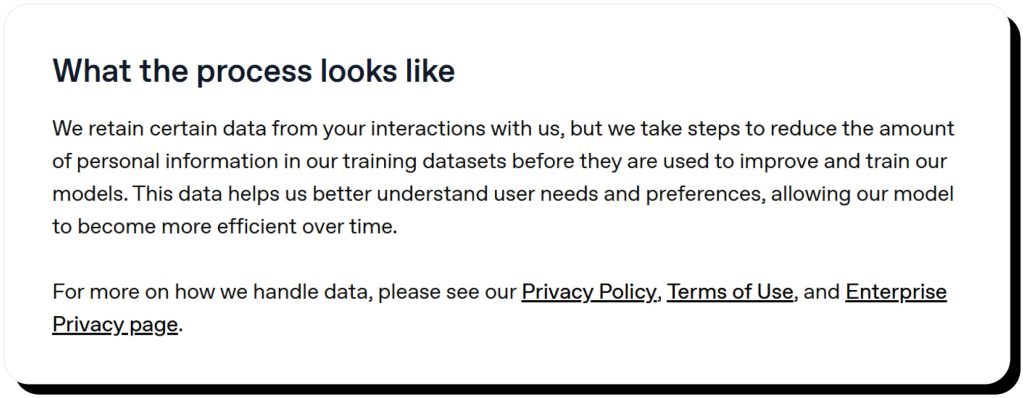

Tension around privacy is at the core of ChatGPT’s business model. The LLM needs to train on new data in order to improve its output, and an easy source of this data is from its users. But it’s possible for users to opt out of this training, and there’s no training by default on prompts entered into ChatGPT Business or Enterprise.

Here are a few measures that OpenAI employs to safeguard users’ privacy:

- Encryption. ChatGPT has both in-transit and at-rest data encryption that adheres to industry standards.

- Two-factor authentication (2FA). Users can add an extra layer of protection to their accounts by going to Settings > Security and enabling 2FA.

- Regular security audits. OpenAI frequently tests the security of ChatGPT by running internal audits as well as having independent cybersecurity firms take a look.

- Bug Bounty Program. OpenAI has invited the global community of developers, ethical hackers, and basically anyone interested to test its system for security flaws.

For organizations using the Enterprise version, ChatGPT provides ways to control user access, particularly with respect to connectors. ChatGPT connectors allow business users to securely link the chatbot to programs like Google Drive and SharePoint, which tend to house sensitive company information. OpenAI offers role-based access control (RBAC) for companies to limit the range of proprietary information each user can access.

OpenAI’s efforts to minimize harmful content

One of the most persistent challenges chatbot makers are facing revolves around harmful outputs. Since ChatGPT was trained on a large portion of the text-based internet, there’s a fair amount of subpar content that went into it (think: violent rhetoric, pornographic content, malicious code snippets).

It’s not possible to remove this data from the LLM after training, so OpenAI uses content moderation filters to detect and block prompts and responses it believes are harmful, illegal, or unsafe. The filters combine automation with human review to evaluate inputs and outputs in real time, reducing the chance that disallowed content is shown. The system also updates continuously as new types of misuse or harmful patterns are identified.

The company issues transparency reports that provide details on how it’s moderating content, combating child exploitation, and responding to government requests for user data.

Security limitations and concerns

There have been some tests indicating that ChatGPT’s search tool could be unduly influenced by hidden content in certain sites and even serve up malicious code. This could be considered a type of “prompt injection”—sneaky instructions that make the model do things it wasn’t meant for.

This goes to show that AI’s “disruptive” use for search may come at the expense of user safety and convenience. Longtime search engines like Google developed ways to detect and penalize hidden text ages ago, back when stuffing white keywords onto a white background was a common spammy SEO technique. ChatGPT, in turn, can’t distinguish between benign and malicious text on its own, so attackers may try to plant hidden content to influence its behavior.

Here’s a quick summary of security issues that careless ChatGPT usage could expose you to:

- Lack of end-to-end encryption. Even though ChatGPT does have encryption, it’s not end-to-end, meaning OpenAI or authorized staff could technically access your conversations while they’re stored on its servers. Moreover, prompts are retained on free and personal accounts for training purposes unless you opt out. As no company is 100% immune to all cyberattacks, there’s always some risk of your information being breached.

- Data leakage. Even if you opt out of the use of your data for training and safeguard your account with 2FA to prevent unauthorized access, leaks may still happen in unexpected ways. This is exactly what happened earlier this year when users discovered that conversations they had shared with the platform’s “Share” feature were being indexed by Google and appearing in search results. The issue stemmed from a small “Make this chat discoverable” option, which many users misunderstood as private sharing when it actually allowed search engines to crawl the content. In another accident that happened in March 2023, a bug in an open-source library allowed some users to briefly see other people’s chat titles and sent subscription confirmation emails with payment information to the wrong people, which exposed sensitive details like billing addresses and the last four digits of credit cards.

- Prompt injection. Usually, we think of prompt injection as something a hacker might do to “jailbreak” the AI and get it to ignore safeguards or leak sensitive instructions. However, it can also happen indirectly, in which an unsuspecting user becomes a victim of misaligned AI behavior due to malicious prompts hidden in documents or webpages that get into a training dataset.

- Model poisoning. Attackers can deliberately corrupt how an AI system learns, making it produce biased, inaccurate, and even harmful outputs. They do so by injecting malicious or misleading data into the model’s training or fine-tuning process (such as seeding fake or manipulated content into public datasets, or subtly altering examples so the AI picks up the wrong patterns). The danger is that poisoned models can appear to function normally while actually undermining trust, safety, and integrity at scale.

Is ChatGPT trustworthy?

This is a multi-layered question. First, there’s the issue around trusting the outputs that ChatGPT provides. Second, there are some concerns around OpenAI’s trustworthiness and whether the company is really acting in society’s best interests. Third, there’s plenty of palpable fear regarding AI’s potential to harm humanity in the distant (or not-so-distant) future.

The first layer is the easiest to address. Nobody should be taking ChatGPT’s output as gospel. (Unfortunately, there are plenty of people who do.) As a predictive text machine, it’s designed to give a plausible (or plausible-sounding) answer, not necessarily a correct one. It can be right enough to be helpful, but you should always double-check sensitive factual information.

Why might people not trust OpenAI? A lot of this distrust stems from observations that CEO Sam Altman and other AI leaders seem to be prioritizing profit over AI safety, with a focus on releasing consumer-ready products before the products (or the consumers) are really ready. The resignation of several prominent people from OpenAI’s safety team also raised alarm bells. In particular, Jan Leike, who co-led OpenAI’s Superalignment team, resigned in May 2024 citing disagreements over the company’s shift toward rapid product launches at the expense of long-term safety. His departure, along with that of co-lead Ilya Sutskever and other researchers, led to the disbanding of the team and fueled concerns that OpenAI was de-emphasizing safety in favor of commercialization.

As to the question of whether artificial general intelligence (AGI) will be good or bad for humanity, there are many prominent voices on both sides of the debate. Sam Altman predicts a “gentle singularity” that will usher in a utopia of sorts; computer scientist and AI safety expert Roman Yampolskiy has quite the opposite view of things. As of now, those are only predictions and it’s hard to tell how everything will play out—after all, people feared the industrial revolution as well. But one thing is certain—there need to be laws and regulations that will limit how AI chats and programs collect and use user data, ensuring privacy and integrity.

Legal and ethical concerns

If AI is likely to destroy humanity, as Yampolskiy and others warn, there’s certainly cause for OpenAI to tread more carefully on the quest to build AGI. However, we don’t need to look into the future to spot legal and ethical issues, as OpenAI is embroiled in some lawsuits right now—notably, with the New York Times.

Here’s a quick rundown of the ethical dilemmas that ChatGPT raises:

- Safeguarding intellectual property. Creators often are not notified when their work gets used to train AI models. In some cases, this may include copyrighted work. For example, the New York Times copyright case alleges that OpenAI used its article content without compensation or permission.

- Preventing academic misuse. Sure, there used to be so-called “essay mills” where students could pay someone else to write their school essays for them, but generative AI makes things way easier. Teachers and professors are so caught up in trying to detect ChatGPT contributions that it’s even harming students who didn’t use AI.

- Avoiding unfair bias in AI-based hiring. We’ve found ourselves in a world where job candidates use AI to write their resumes, and hiring managers use AI to read them. Aside from the impersonality of it all, there’s a non-negligible risk of bias baked into systems like ChatGPT. Because the model reflects patterns in its training data, it can unintentionally favor or disadvantage certain demographics, perpetuating inequalities that already exist in the job market.

- Keeping people safe from harmful content. Despite OpenAI’s efforts to keep ChatGPT “aligned”—that is, only offering up outputs that are beneficial to the user and to society as a whole—preventing harmful content is proving to be quite a challenge. Currently, OpenAI is facing a lawsuit from the parents of a teenager for whom the chatbot became a “suicide coach,” and other AI companies are seeing similar tragedies arise from their services.

Best practices for using ChatGPT safely

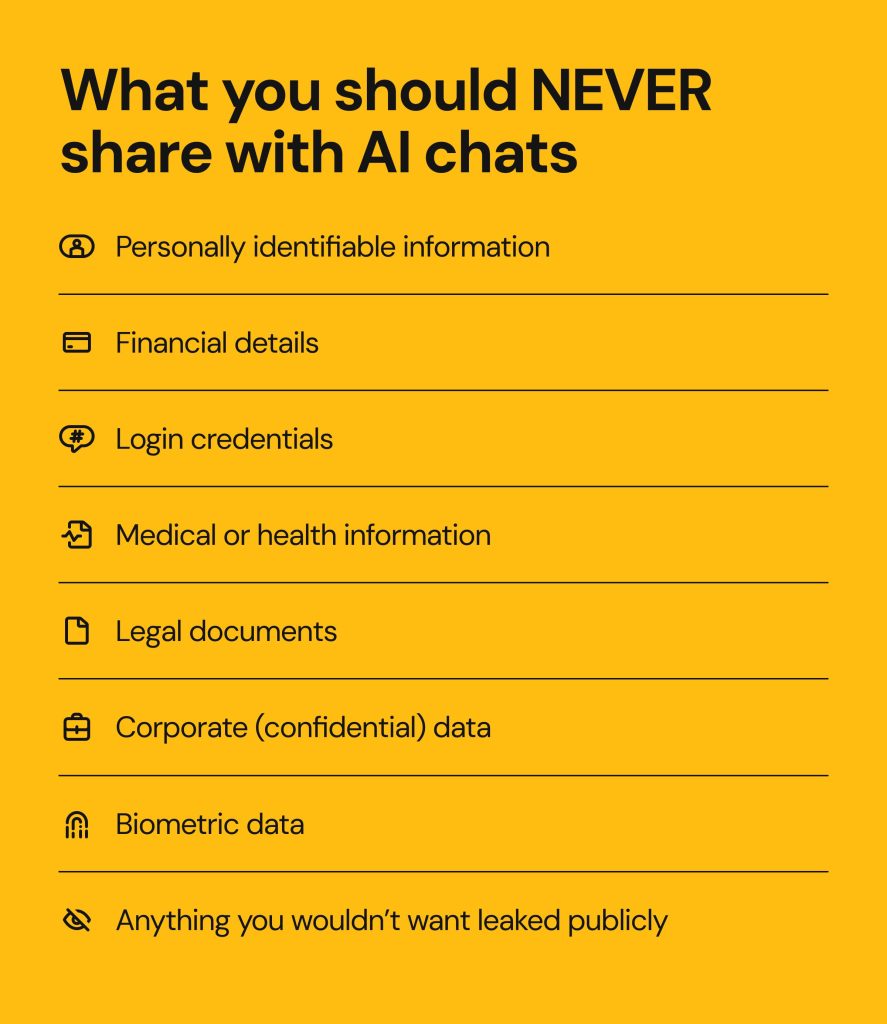

Safe ChatGPT use depends on a number of things. First off, you want to safeguard your personal info and avoid using the service in a way that could put you at risk of identity theft. Secondly, it’s important to make sure you’re steering clear of trusting false information or anything that could harm your mental health.

Here are a few rules of thumb:

- Don’t put any sensitive info into the chat. Don’t tell it your address, your bank information, your passwords, or anything that scammers could use to break into your accounts or impersonate you.

- Don’t sign in with a third-party app. Signing up with Facebook or Google may open up a door for these applications to exchange data on you, which could erode your digital security. A better option is to create a unique password and enable 2FA.

- If you’re an organization, implement zero trust. A zero-trust framework means that you always validate users and their devices before granting access to sensitive information.

- ALWAYS verify ChatGPT’s output. ChatGPT isn’t foolproof; it’s been known to provide false information. Even the sources it cites could be false too. Always double-check for veracity.

Final thoughts: is ChatGPT safe in 2025?

If you’re using ChatGPT responsibly and double-check any info that you may be relying on, it’s safe. It can be a useful tool for brainstorming, summarizing, and helping you pass the time—but it’s not a foolproof research assistant.

The program itself isn’t malicious and implements measures to protect user privacy. If you’re practicing good cyber hygiene and keeping your sensitive data out of the chats, you’ll likely be able to use the service without incident.

FAQs

How safe is ChatGPT?

ChatGPT is safe as long as you avoid submitting any personal data to your chats and verify any information that the service provides (never take replies as factual).

Is ChatGPT safe from hackers?

Even though no online platform is 100% immune to hackers, ChatGPT hasn’t experienced any successful attacks to date. So far, the leaks have happened due to misuse, misconfiguration, and design flaws.

Is the ChatGPT app safe?

The ChatGPT app is generally safe to use, as it encrypts data, implements account protections like 2FA, and undergoes security audits. However, it isn’t end-to-end encrypted, so OpenAI can technically access stored conversations. Moreover, prompts in free and paid personal accounts may be logged for training unless you opt out. Importantly, the main risks come not from the app itself but from how users interact with it—such as oversharing sensitive information, relying on unverified answers, or downloading fake ChatGPT apps from unofficial sources.

Is it safe to sign up for ChatGPT?

It’s safe to sign up for ChatGPT as it’s a secure service that uses encryption and follows industry security standards. However, it’s better to avoid signing up using your Google or Facebook account, but create a unique password instead. It’s also crucial that you enable 2FA.

Mark comes from a strong background in the identity theft protection and consumer credit world, having spent 4 years at Experian, including working on FreeCreditReport and ProtectMyID. He is frequently featured on various media outlets, including MarketWatch, Yahoo News, WTVC, CBS News, and others.